Google I/O 2024 Announcements: Going All In On AI

At its I/O 2024 event on Tuesday, Google unveiled a number of significant updates, including new Gemini and Gemma models, new AI capabilities for Android, a new AI voice assistant, and a text-to-video generator.

Sundar Pichai and other Google executives took the stage in Mountain View, California, to make a string of AI-focused announcements. Google also revealed updates to the next versions of Android and Wear OS, though with far less fanfare.

Unsurprisingly, Tuesday's keynote focused almost entirely on software and AI, including Google Gemini, its many apps, and what's on the horizon for Android. Unlike previous I/O keynotes, this one included no hardware announcements or teasers. Here are the major announcements Google made at I/O 2024.

Project Astra

With Project Astra, a brand-new AI assistant unveiled on Tuesday, Google squared off against OpenAI. In a Google demo, the assistant identified objects in a room, explained a section of code on a screen, worked out the user's location by looking out the window, remembered where the user had left their glasses, and even came up with a playful name for a dog. Google also showed Astra running on both a smartphone and a prototype pair of smart glasses, suggesting that Google Lens may get a significant Gemini-powered update in the future.

Astra can answer questions posed in real time through text, video, images, or audio, drawing on information from the internet and on what it sees through your smartphone's camera.

To do this, Astra continuously encodes audio and video frames into a timeline and caches that information for recall. In other words, it sees the world through your smartphone's camera and, much like a person, interprets, responds to, and remembers what it sees, even after an object has moved out of the camera's frame.
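To make the "timeline" idea more concrete, here is a minimal Python sketch of a rolling cache of timestamped observations that can answer "when did I last see X?" after the object has left the frame. Google has not published Astra's internals, so this is purely illustrative: the `Observation` and `Timeline` classes, the use of plain string labels in place of real encoded frames, and the fixed capacity are all hypothetical assumptions.

```python
from collections import deque
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Observation:
    """Hypothetical stand-in for one encoded moment: a timestamp plus detected labels."""
    timestamp: float
    labels: frozenset


class Timeline:
    """Hypothetical rolling cache of observations; the oldest is dropped when full."""

    def __init__(self, capacity: int = 1000) -> None:
        # A deque with maxlen evicts the oldest entry automatically once full
        self._frames: deque = deque(maxlen=capacity)

    def add(self, timestamp: float, labels: Iterable[str]) -> None:
        self._frames.append(Observation(timestamp, frozenset(labels)))

    def last_seen(self, label: str) -> Optional[float]:
        # Scan from newest to oldest so the most recent sighting is returned
        for obs in reversed(self._frames):
            if label in obs.labels:
                return obs.timestamp
        return None


timeline = Timeline(capacity=3)
timeline.add(0.0, {"desk", "glasses"})
timeline.add(1.0, {"window"})
timeline.add(2.0, {"whiteboard"})
print(timeline.last_seen("glasses"))  # 0.0, even though the glasses are no longer in view
```

The fixed-size buffer is simply one easy way to bound memory in a sketch like this; with `capacity=3`, adding a fourth observation would evict the glasses sighting.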

Gemini 1.5

Google announced that Gemini 1.5 Pro, its latest generative AI model, is now available to Gemini Advanced subscribers. The model, which is offered in more than 150 countries and over 35 languages, also gains a number of new features.

According to Sissie Hsiao, vice president and general manager of Gemini Experiences and Google Assistant, “Gemini is designed to be your personal AI assistant — one that’s conversational, intuitive and helpful. Whether you use it in the app or through the web experience, Gemini can help you tackle complex tasks, and it can take action on your behalf.”

Just as it did with Maps and YouTube, Google is tightly integrating Gemini with Calendar, Tasks, and Keep, so users can take advantage of its generative AI features without switching between apps.

Gmail, one of Google's most widely used products, is also getting Gemini features: it can summarize long email threads, and an upgraded smart reply feature will draft more context-aware responses based on your email exchanges.

Thanks to its multimodal capabilities, Gemini 1.5 Pro also brings a significant boost in image understanding. For example, from a photo of a dish at a restaurant it can suggest a recipe, and from a photo of a math problem in a textbook it can provide a step-by-step explanation of the solution.

One of Gemini 1.5 Pro's new features is a larger context window that can hold up to one million tokens.

Google says this is the longest context window of any consumer chatbot, enabling users to summarize up to 100 emails at once and work through documents or books of up to 1,500 pages.

In the coming days, Google also plans to let Gemini handle codebases of more than 30,000 lines of code and hour-long videos.

Users can also upload files to Gemini directly from their device or through Google Drive to get quick information, insights, and answers. In this scenario, Gemini acts as a data analyst, using the uploaded data to create custom charts and visualizations.

AI in Android 15

Although Google did not spend much time highlighting Android 15 itself, it did reveal more AI features coming to Android. For instance, Circle to Search will roll out more widely. TalkBack, Android's screen reader for people who are blind or have low vision, is also gaining AI-generated descriptions of photos.

Google is also introducing a feature called "Theft Detection Lock," which uses the phone's accelerometer and "Google AI" to detect motion that suggests the phone has been snatched, and then locks the screen.

In addition, Google is introducing private space, a separate area on your phone where you can keep apps that contain sensitive data.

According to Google, private space runs as a separate user profile; when you lock it, the profile is paused and its apps stop running.

Google also said that it is testing a new call-monitoring feature that alerts users when the person they are speaking with over the phone is probably trying to scam them. When the feature detects that an unknown caller is using conversation patterns associated with scams, it uses Gemini Nano to send alerts during the call. Gemini displays a red warning on the phone screen that says "likely scam," suggests that the user end the call, and gives a brief explanation of why it believes the call might be dangerous.

Google Veo

With Veo, its newest text-to-video generation model, Google is posing a direct challenge to OpenAI's Sora. The new model can produce 1080p videos in a variety of visual and cinematic styles. Because Veo understands cinematic terminology such as "timelapse" and "aerial shots of a landscape," it also gives users more control over the output.

According to reports, Veo can create video sequences of 60 seconds or more from a single prompt or from a sequence of prompts that build a narrative. It can also reportedly edit existing videos using text instructions while keeping the visuals consistent from frame to frame. According to Google, it is capable of rendering complex scenes in a range of visual styles.

Google says Veo builds on its earlier video generation work, including Generative Query Network (GQN), DVD-GAN, Imagen Video, Phenaki, WALT, VideoPoet, and Lumiere. To improve both quality and efficiency, Veo uses compressed "latent" video representations, and Google added more detailed captions to the training videos, which helps the model interpret prompts more precisely.

AI in Google Search

Google is also using AI to update its search experience, powered by a new Gemini model customized specifically for Google Search. AI Overviews, short AI-generated summaries of a search topic, grew out of the Search Generative Experience (SGE), which was previously available only in experimental form. Users in the US will be able to access AI Overviews starting today.

The company will also launch a new AI-organized search results page that groups results under AI-generated headlines. The new page will first be available for searches related to dining and recipes, followed by movies, music, books, hotels, and shopping.

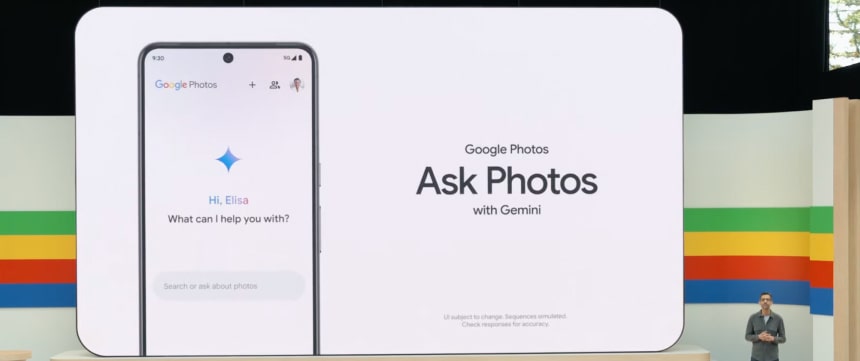

Ask Photos

Google Photos is getting a Gemini-powered upgrade that lets users find a specific picture with a single prompt. The new feature, called "Ask Photos," takes advantage of Gemini's multimodal abilities to understand the context and subject of each picture and surface the image the user asked for.

For instance, users can ask Gemini about a specific event, object, or person, and the assistant will instantly search their gallery for the matching images.

In addition to requesting the best photos from a trip or another event, users can pose queries that call for a nearly human-level comprehension of the content in their images.

If a parent were to ask Google Photos, for example, what themes they had used for their child's last four birthday parties, it would give a straightforward answer along with images and videos showing the mermaid, princess, and unicorn themes and when each one was used.

This kind of search is possible because Google Photos understands natural-language concepts such as "themed birthday party," not just the keywords you type.

Gemma 2

Google revealed major upgrades to its Gemma family of open-weight AI models. The star of the show is Gemma 2, a new generation that will be released in June and includes a 27-billion-parameter model, a significant increase over the current 2-billion and 7-billion-parameter versions. Google asserts that, despite being smaller than some of its rivals, Gemma 2 outperforms models twice its size. Google also unveiled PaliGemma, a pre-trained Gemma variant designed for image-related tasks such as labeling, captioning, and visual question answering.

Google has optimized the model to run on Nvidia GPUs and Google Cloud TPUs, and it will be available through Vertex AI. Although comprehensive performance data is not yet available, Google highlights the Gemma family's strong early performance and millions of downloads.

That’s all the coverage from the Google I/O 2024 event. Stay updated with us on the latest tech happenings around the world. Royex Technologies is a leading mobile app and e-commerce development company in Dubai. We have the skill and expertise to deliver you a quality finished product that will grow your business. For more information please visit our website at www.royex.ae or call us now at +971566027916.